Harnessing the full potential of Gen AI requires more than powerful algorithms. It demands a strategic, well-structured environment known as the Gen AI Landing Zone. This foundational setup supports the deployment and scaling of AI applications while providing governance, security, and collaboration across teams.

In this blog post, we delve into what constitutes a Gen AI Landing Zone and its key components.

Azure AI Landing Zone with Terraform

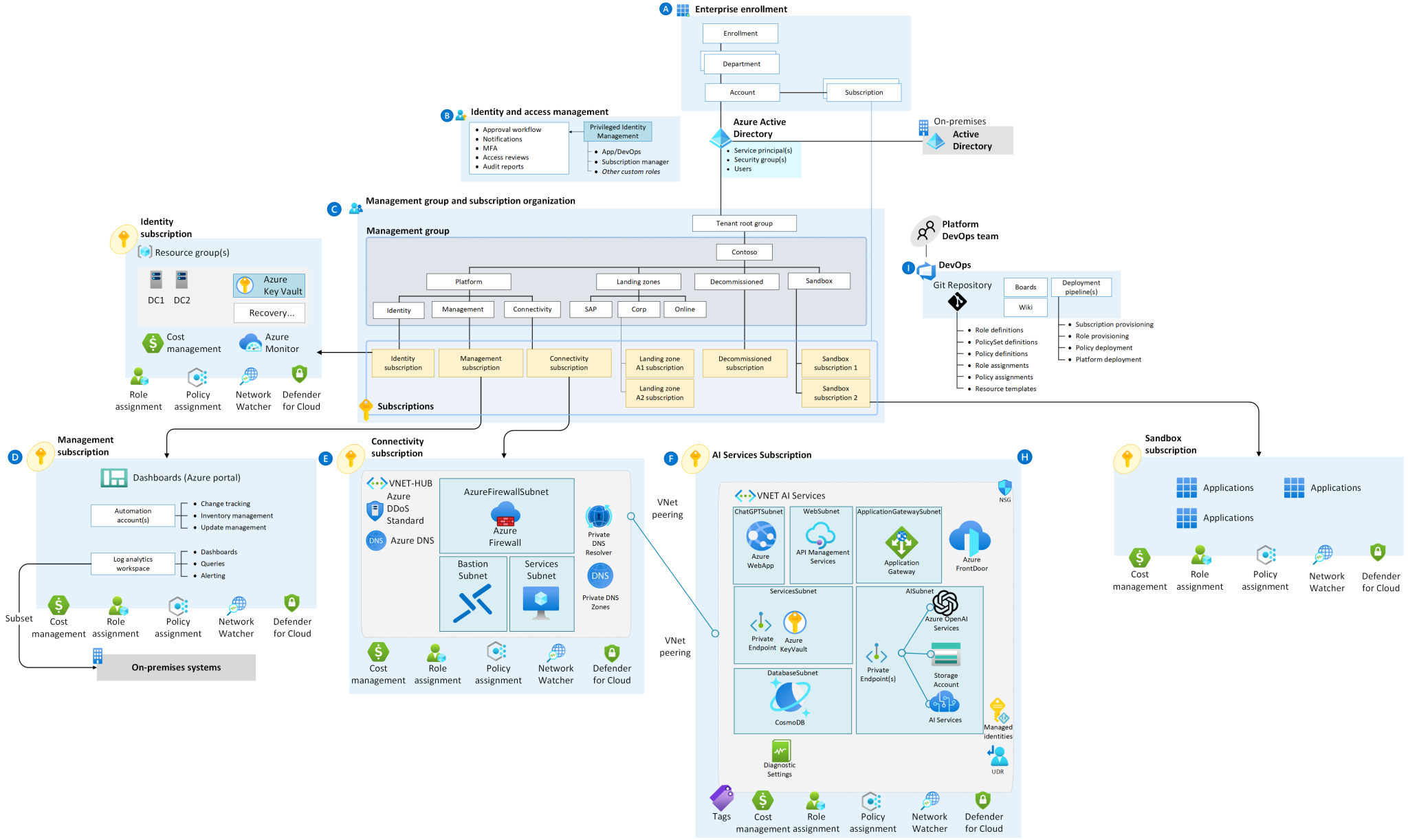

Let's walk through the architecture scenario shown above:

In this scenario, the user deploys a standard landing zone architecture to ensure a well-organized and secure Azure environment. The architecture includes the following components and steps:

Standard Landing Zone: The deployment begins with setting up a standard Azure Landing Zone. This Landing Zone follows best practices and guidelines for organizing resources and aligning with security and compliance standards.

Internal Network Access: Users can access an Azure App Service from within the internal network. To achieve this, a jumpbox or bastion host is used as an entry point to the Azure network. Users connect to the jumpbox securely, and from there, they can access other resources within the Azure network.

Azure App Service with Private Link: The Azure App Service is configured to use Private Link, so the web application is reachable only over a private network. This minimizes its exposure to the public internet.

Azure OpenAI Integration: The web application deployed in the Azure App Service needs to connect to Azure OpenAI. To achieve secure communication, it uses a private endpoint for Azure OpenAI. Private endpoints allow services to be accessed privately within a virtual network, reducing exposure to the public internet.

Firewall Rules for Azure OpenAI: Since the Azure App Service’s outbound IP address is dynamic and public, it requires additional configuration on the Azure OpenAI side. Firewall rules are set up in Azure OpenAI to allow communication from the Azure App Service’s dynamic IP address. This ensures that the web application can establish a secure connection with Azure OpenAI while maintaining a private and secure network architecture.
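
The private endpoint and firewall rule described in the last two items might look roughly like the following Terraform. This is a minimal sketch using the `azurerm` provider, not the repository's actual code. The resource names, the input variables, and the `app_outbound_ips` list are assumptions made for illustration.

```hcl
# Hypothetical inputs; the real modules may name these differently.
variable "resource_group_name" { type = string }
variable "location" { type = string }
variable "private_endpoint_subnet_id" { type = string }
variable "open_ai_private_dns_zone_id" { type = string }
variable "app_outbound_ips" { type = list(string) } # App Service outbound IPs

resource "azurerm_cognitive_account" "openai" {
  name                  = "oai-example" # assumed name
  resource_group_name   = var.resource_group_name
  location              = var.location
  kind                  = "OpenAI"
  sku_name              = "S0"
  custom_subdomain_name = "oai-example" # required when network_acls is set

  network_acls {
    default_action = "Deny"               # block everything not listed
    ip_rules       = var.app_outbound_ips # allow the web app's outbound IPs
  }
}

resource "azurerm_private_endpoint" "openai" {
  name                = "pe-openai-example" # assumed name
  resource_group_name = var.resource_group_name
  location            = var.location
  subnet_id           = var.private_endpoint_subnet_id

  private_service_connection {
    name                           = "psc-openai"
    private_connection_resource_id = azurerm_cognitive_account.openai.id
    subresource_names              = ["account"]
    is_manual_connection           = false
  }

  # Registers the endpoint in the privatelink.openai.azure.com zone.
  private_dns_zone_group {
    name                 = "openai-dns"
    private_dns_zone_ids = [var.open_ai_private_dns_zone_id]
  }
}
```

Because the App Service's outbound addresses can change, the `ip_rules` list in a setup like this would need to be refreshed whenever they do.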

Overall, this architecture scenario emphasizes security and isolation by utilizing private endpoints, restricting access to internal networks, and carefully managing communication between the Azure App Service and Azure OpenAI. It allows users to securely access the web application within the Azure network while ensuring that external connections are controlled and protected.

AI Landing Zone Features:

Sample Private GPT Web Application

- A sample web application that connects to Azure OpenAI and uses Azure Cosmos DB to store conversation history.

- Can connect to Azure Cognitive Search to query custom indexed data.

Linux Web App Deployment

- Deploys the web application inside a Linux web app using Azure App Service.

- Ensures a scalable and reliable hosting environment.
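
A Linux web app of this kind could be declared along the following lines. This is a sketch under assumptions: the names, the `P1v3` SKU, and the `app_subnet_id` variable are illustrative and not taken from the repository.

```hcl
# Hypothetical inputs for illustration.
variable "resource_group_name" { type = string }
variable "location" { type = string }
variable "app_subnet_id" { type = string } # subnet delegated to Microsoft.Web/serverFarms

resource "azurerm_service_plan" "chat" {
  name                = "asp-chat-example" # assumed name
  resource_group_name = var.resource_group_name
  location            = var.location
  os_type             = "Linux"
  sku_name            = "P1v3" # assumed SKU
}

resource "azurerm_linux_web_app" "chat" {
  name                      = "app-chat-example" # assumed name
  resource_group_name       = var.resource_group_name
  location                  = var.location
  service_plan_id           = azurerm_service_plan.chat.id
  virtual_network_subnet_id = var.app_subnet_id # regional VNet integration
  https_only                = true

  site_config {
    vnet_route_all_enabled = true # send all outbound traffic through the VNet
  }
}
```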

Private Link for OpenAI Service

- Establishes a Private Link for the OpenAI service.

- Implements firewall rules to allow secure communication between the web app and OpenAI.

Application Gateway and WAF Deployment

- Deploys an Application Gateway with Web Application Firewall (WAF) to securely expose your chat web app to the internet.

- Enhances security and protection against common web application attacks.
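
The WAF part of this feature can be expressed as a standalone policy that an Application Gateway then references through its `firewall_policy_id` argument. The sketch below shows only the policy. The gateway itself is omitted for brevity, and the names and rule set version are assumptions.

```hcl
# Hypothetical inputs for illustration.
variable "resource_group_name" { type = string }
variable "location" { type = string }

resource "azurerm_web_application_firewall_policy" "chat" {
  name                = "waf-chat-example" # assumed name
  resource_group_name = var.resource_group_name
  location            = var.location

  policy_settings {
    enabled = true
    mode    = "Prevention" # block matching requests instead of only logging them
  }

  managed_rules {
    managed_rule_set {
      type    = "OWASP" # covers common attacks such as SQL injection and XSS
      version = "3.2"   # assumed version
    }
  }
}
```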

Azure API Management (APIM) Integration (Work in Progress)

- Integration with Azure API Management (APIM) is still in progress. Once complete, it is intended to provide comprehensive API management and monitoring.
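
The workload's `terraform.tfvars` includes a `deploy_apim` flag. One common way to wire such a flag is a conditional `count`, as sketched below. Whether the repository does it this way is an assumption, as are the resource name, publisher details, and SKU.

```hcl
variable "deploy_apim" {
  type    = bool
  default = false
}

# Hypothetical inputs for illustration.
variable "resource_group_name" { type = string }
variable "location" { type = string }

resource "azurerm_api_management" "apim" {
  count               = var.deploy_apim ? 1 : 0 # created only when the flag is true
  name                = "apim-example"          # assumed name
  resource_group_name = var.resource_group_name
  location            = var.location
  publisher_name      = "Example Publisher"
  publisher_email     = "admin@example.com"
  sku_name            = "Developer_1" # assumed SKU
}
```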

Routing and VNET Integration

- Establishes routing and integrates with Virtual Network (VNET) for secure communication and isolation.

- Utilizes Network Security Groups (NSGs) to control traffic and enhance network security.
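
As an illustration of the NSG part, the following sketch creates a security group that admits HTTPS traffic from inside the virtual network and attaches it to a subnet. The rule, the names, and the `subnet_id` variable are assumptions and do not reflect the repository's actual rule set.

```hcl
# Hypothetical inputs for illustration.
variable "resource_group_name" { type = string }
variable "location" { type = string }
variable "subnet_id" { type = string }

resource "azurerm_network_security_group" "app" {
  name                = "nsg-app-example" # assumed name
  resource_group_name = var.resource_group_name
  location            = var.location

  security_rule {
    name                       = "allow-https-from-vnet"
    priority                   = 100
    direction                  = "Inbound"
    access                     = "Allow"
    protocol                   = "Tcp"
    source_port_range          = "*"
    destination_port_range     = "443"
    source_address_prefix      = "VirtualNetwork" # service tag for VNet address space
    destination_address_prefix = "*"
  }
}

# Attach the NSG to the subnet so its rules take effect.
resource "azurerm_subnet_network_security_group_association" "app" {
  subnet_id                 = var.subnet_id
  network_security_group_id = azurerm_network_security_group.app.id
}
```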

Integration with Main Landing Zone

- Integrates seamlessly with the main landing zone using network peering.

- Provides a consistent and cohesive infrastructure for AI workloads within the broader Azure environment.
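
Network peering from the AI spoke to the hub could be sketched as follows. The `hub_vnet_id` variable matches the field used in the workload's `terraform.tfvars`. The other variable names and the peering name are assumptions.

```hcl
variable "hub_vnet_id" { type = string }

# Hypothetical inputs for illustration.
variable "spoke_resource_group_name" { type = string }
variable "spoke_vnet_name" { type = string }

resource "azurerm_virtual_network_peering" "spoke_to_hub" {
  name                         = "peer-ai-lz-to-hub" # assumed name
  resource_group_name          = var.spoke_resource_group_name
  virtual_network_name         = var.spoke_vnet_name
  remote_virtual_network_id    = var.hub_vnet_id
  allow_virtual_network_access = true
  allow_forwarded_traffic      = true # lets traffic routed via the hub reach the spoke
}
```

Peering must exist in both directions to become active. The `spoke_peerings` setting in the landing zone configuration appears to cover the hub-to-spoke side.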

Deploy the AI Landing Zone with Terraform using the steps below

1. Establish Connectivity

Begin by creating a file named `terraform.tfvars` in the `/Landing_Zone/` directory. In the `connectivity_subscription` field, replace `<your connectivity subscription>` with your actual subscription ID. Adjust the settings in `/Landing_Zone/settings.connectivity.tf` to suit your requirements. Then authenticate to Azure with `az login`.

Example `terraform.tfvars`:

    connectivity_subscription = "your_subscription_Id"
    management_subscription   = "your_management_subscription_Id"
    identity_subscription     = "your_subscription_Id"
    ai_lz_subscription        = "your_subscription_Id"
    location                  = "eastus"
    email_security_contact    = "your emailid"
    log_retention_in_days     = 30
    management_resources_tags = {}
    scope_management_group    = "<Your Management Group Name>"
    # Update this after deploying the spoke, then redeploy the landing zone
    spoke_peerings = ["/subscriptions/<subscription_id>/resourceGroups/rg-network/providers/Microsoft.Network/virtualNetworks/vnet-ai-lz"]
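
Values such as `connectivity_subscription` and `management_subscription` are typically consumed through variable declarations and aliased providers, one per subscription. The sketch below shows that general pattern. It is an assumption about how the repository is organized, not a copy of its code.

```hcl
variable "connectivity_subscription" { type = string }
variable "management_subscription" { type = string }

# One aliased provider per target subscription.
provider "azurerm" {
  alias           = "connectivity"
  subscription_id = var.connectivity_subscription
  features {}
}

provider "azurerm" {
  alias           = "management"
  subscription_id = var.management_subscription
  features {}
}
```

A module or resource then selects the subscription it deploys into by referencing the alias, for example `provider = azurerm.connectivity`.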

IMPORTANT: Modify the parameter near line 196 in the file settings.connectivity.tf to match your target region:

    advanced = {
      custom_settings_by_resource_type = {
        azurerm_subnet = {
          connectivity = {
            canadaeast = { # <=== Replace with your desired location
              inboundsubnetdns = {

2. Initialize and Review Deployment

Open your command-line interface and navigate to the `/Landing_Zone` directory.

Run `terraform init -reconfigure` to initialize the Terraform working directory using local state.

Preview the deployment with `terraform plan -var-file="terraform.tfvars"`.
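
Local state is Terraform's default, so no backend block is strictly required. Written out explicitly, it would look like the sketch below, where the `path` value is simply the default shown for clarity. The `-reconfigure` flag tells `terraform init` to ignore any previously saved backend configuration.

```hcl
terraform {
  backend "local" {
    path = "terraform.tfstate" # default location, shown explicitly
  }
}
```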

3. Deploy the Connectivity Infrastructure

Run `terraform apply -var-file="terraform.tfvars"` to deploy the connectivity infrastructure for the landing zone.

4. Deploy AI Workloads

- Navigate to the /Workload/AI directory.

- Create a file named terraform.tfvars in the /Workload/AI directory.

- Replace `<your connectivity subscription>` and `<your AI subscription>` with your respective subscription IDs.

- Copy the ID of the hub VNet that was deployed with the landing zone and paste it into the `hub_vnet_id` field.

Then run the same `terraform init`, `terraform plan`, and `terraform apply` commands as in steps 2 and 3 to deploy the AI workloads.

Example terraform.tfvars:

    connectivity_subscription        = "<Subscription_id>"
    ai_subscription                  = "<AI_Subscription_id>"
    hub_vnet_id                      = "/subscriptions/<Subscription_id>/resourceGroups/es-connectivity-eastus/providers/Microsoft.Network/virtualNetworks/es-hub-eastus"
    hub_dns_servers                  = ["10.100.1.132", "168.63.129.16"]
    open_ai_private_dns_zone_id      = "/subscriptions/<AI_Subscription_id>/resourceGroups/es-dns/providers/Microsoft.Network/privateDnsZones/privatelink.openai.azure.com"
    app_service_private_dns_zone_id  = "/subscriptions/<Subscription_id>/resourceGroups/es-dns/providers/Microsoft.Network/privateDnsZones/privatelink.azurewebsites.net"
    deploy_apim                      = false
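
Because `hub_vnet_id` and `hub_dns_servers` are pasted in by hand, input validation can catch a malformed value at plan time. The declarations below are a sketch of how that could be done. The validation rules are an illustrative addition and are not known to be part of the repository.

```hcl
variable "hub_vnet_id" {
  type        = string
  description = "Resource ID of the hub virtual network."

  validation {
    # Expect the full /subscriptions/.../virtualNetworks/<name> form.
    condition = can(regex(
      "^/subscriptions/[^/]+/resourceGroups/[^/]+/providers/Microsoft\\.Network/virtualNetworks/[^/]+$",
      var.hub_vnet_id
    ))
    error_message = "The hub_vnet_id value must be a full virtual network resource ID."
  }
}

variable "hub_dns_servers" {
  type        = list(string)
  description = "DNS servers the spoke VNet should use."

  validation {
    # cidrhost fails on anything that is not a valid IPv4 address.
    condition     = alltrue([for ip in var.hub_dns_servers : can(cidrhost("${ip}/32", 0))])
    error_message = "Each hub_dns_servers entry must be a valid IPv4 address."
  }
}
```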